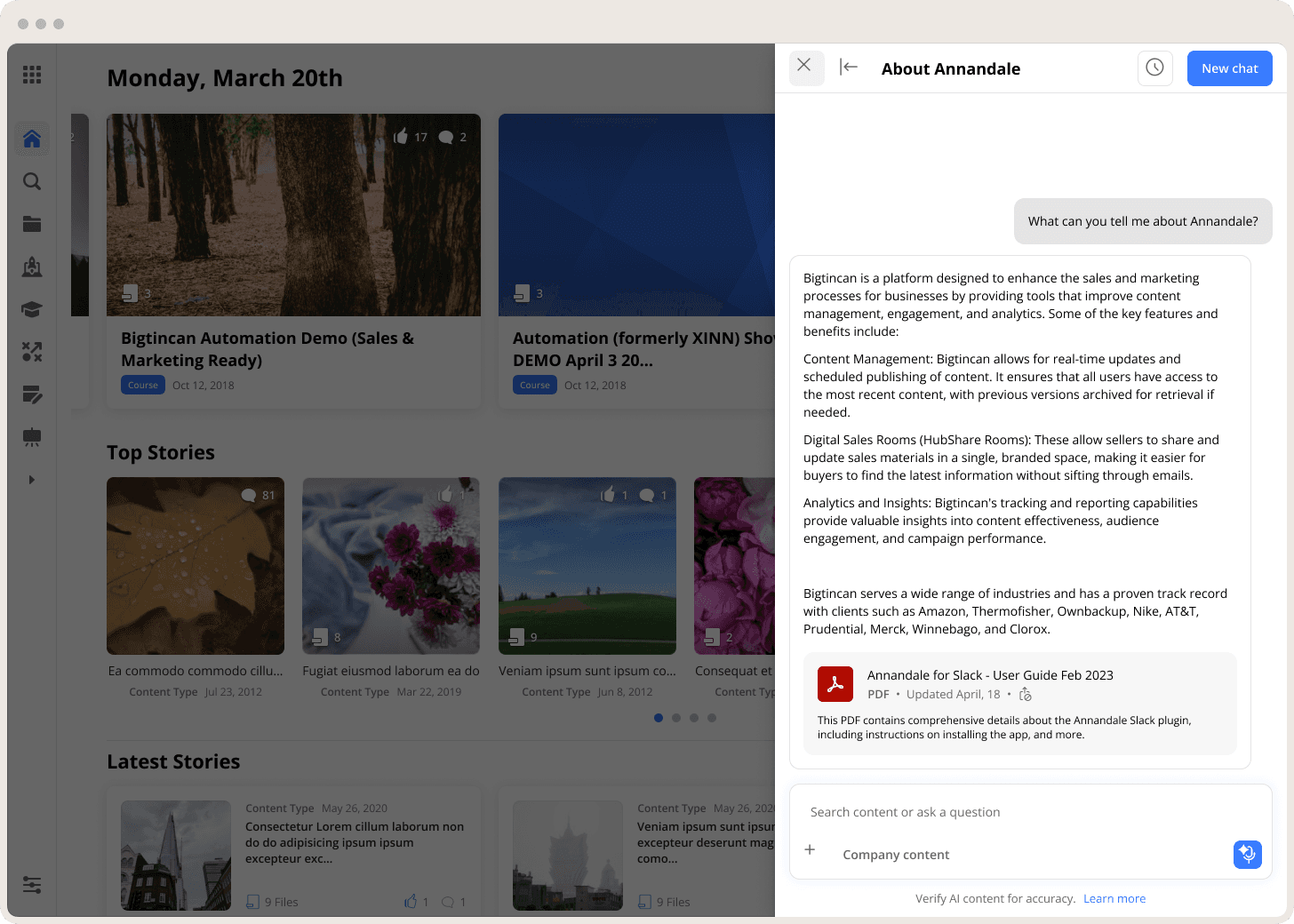

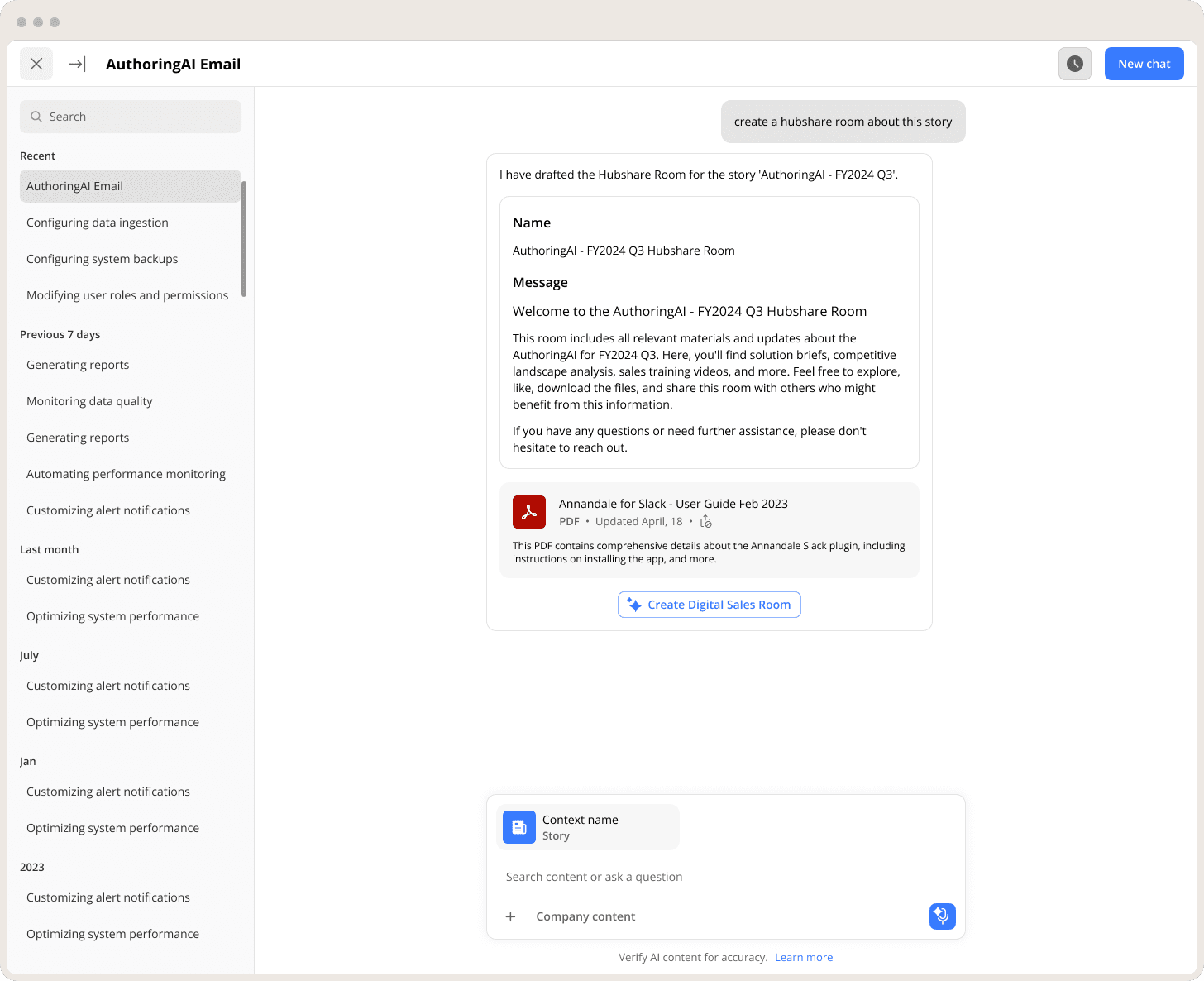

The context

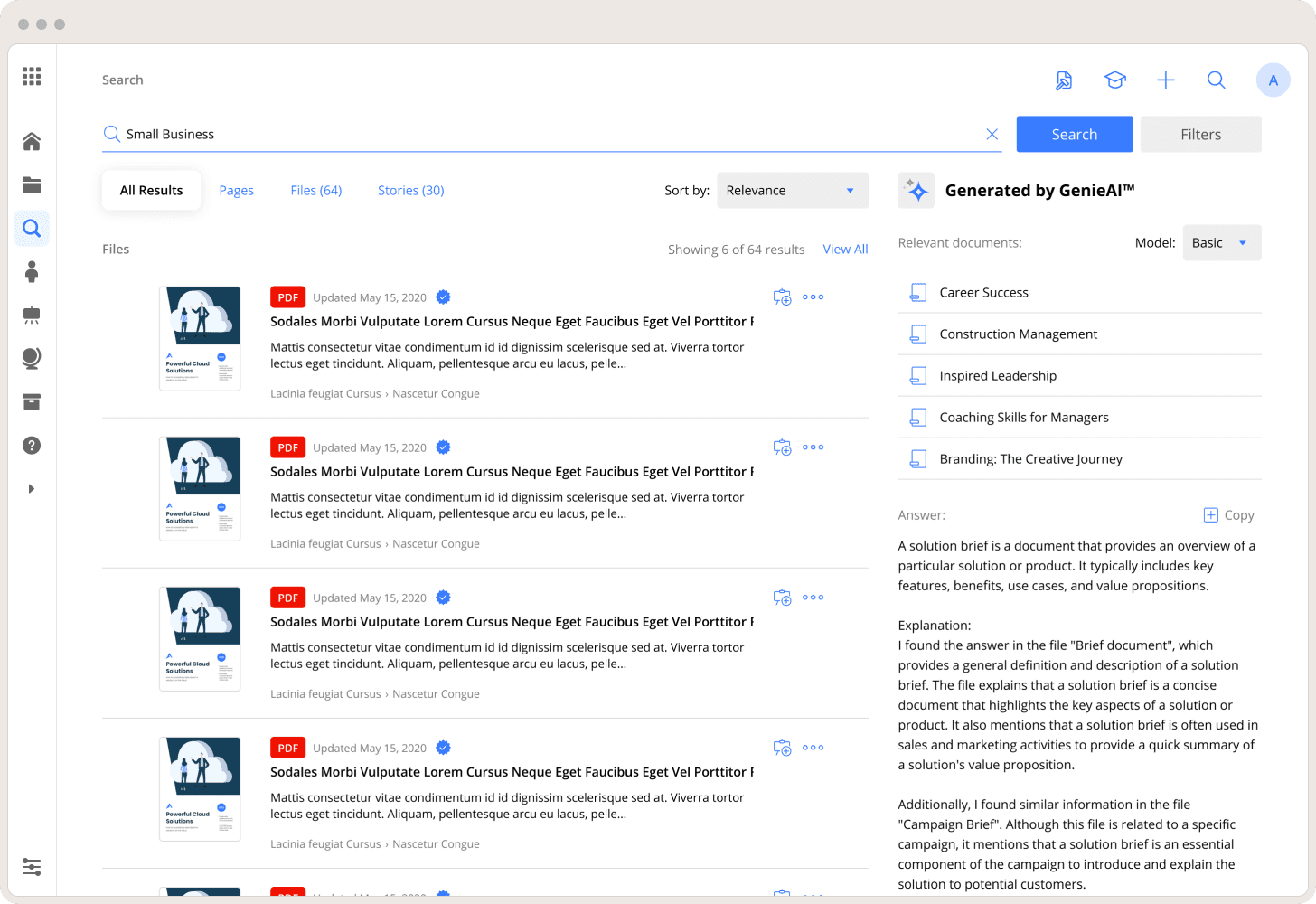

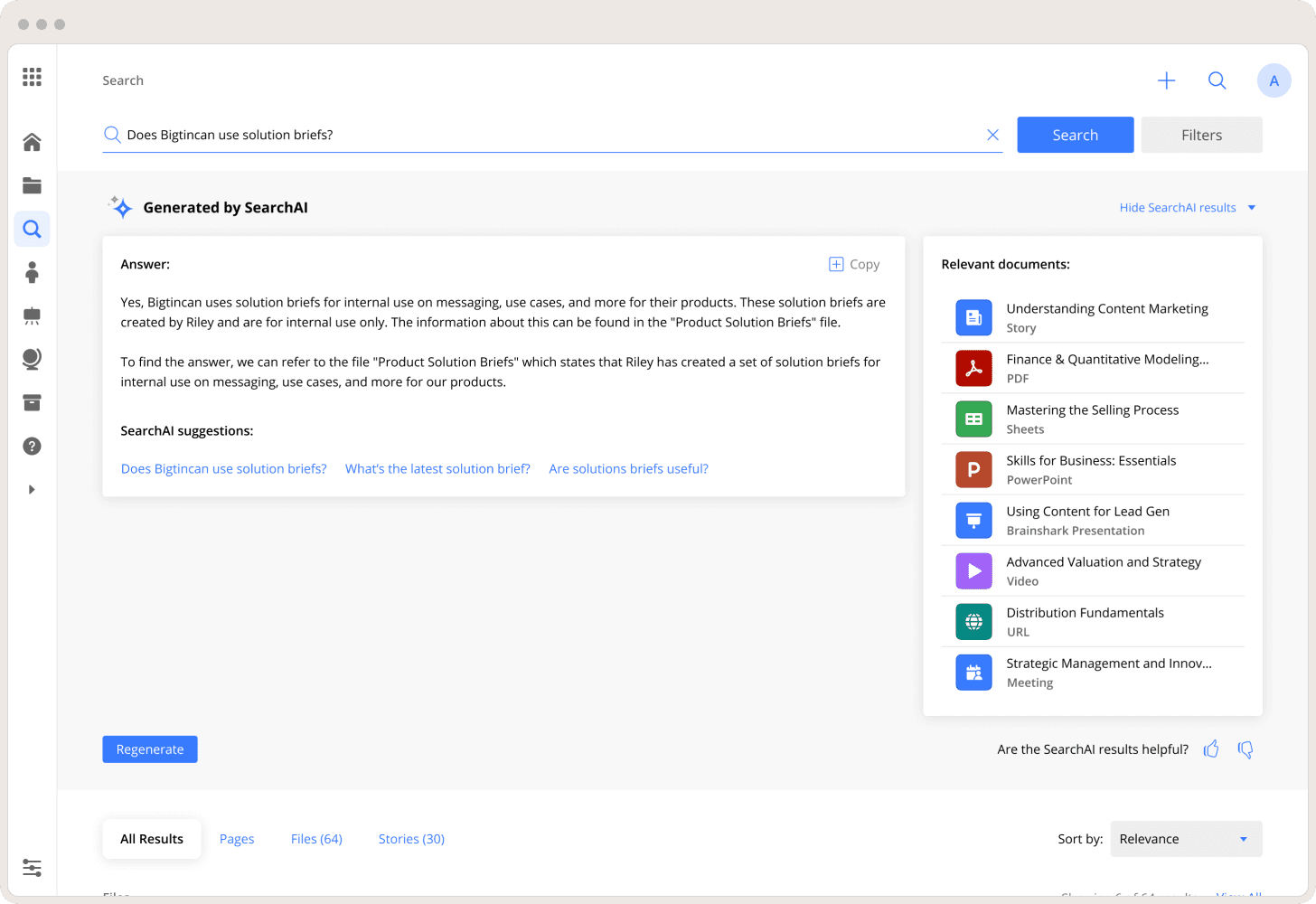

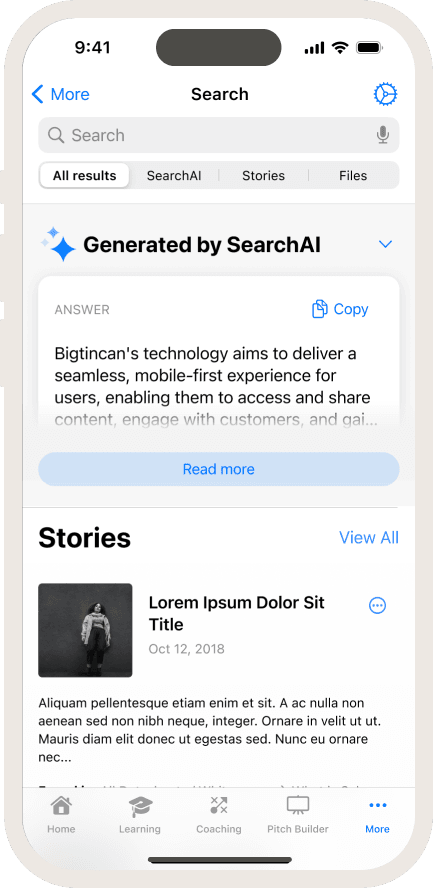

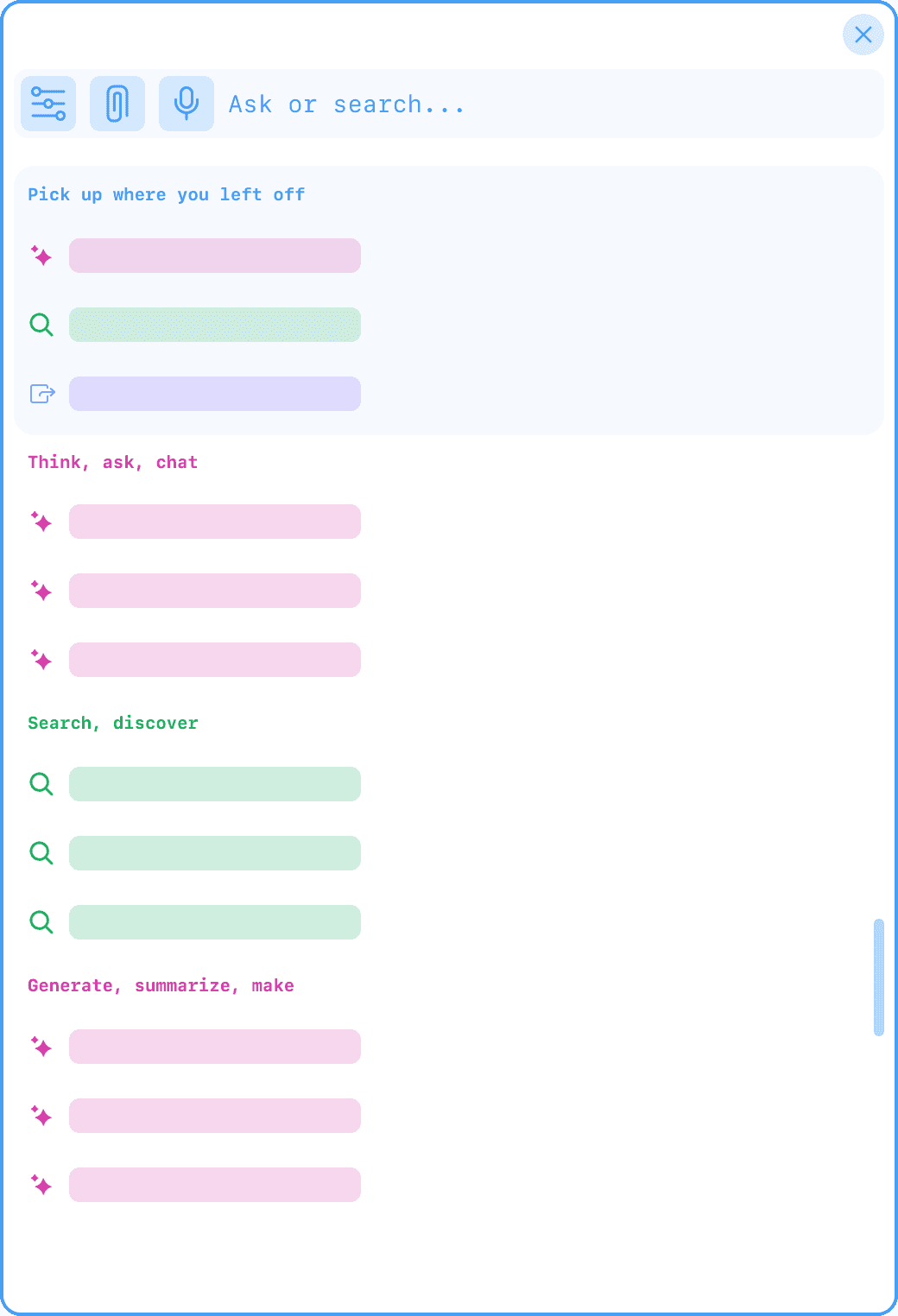

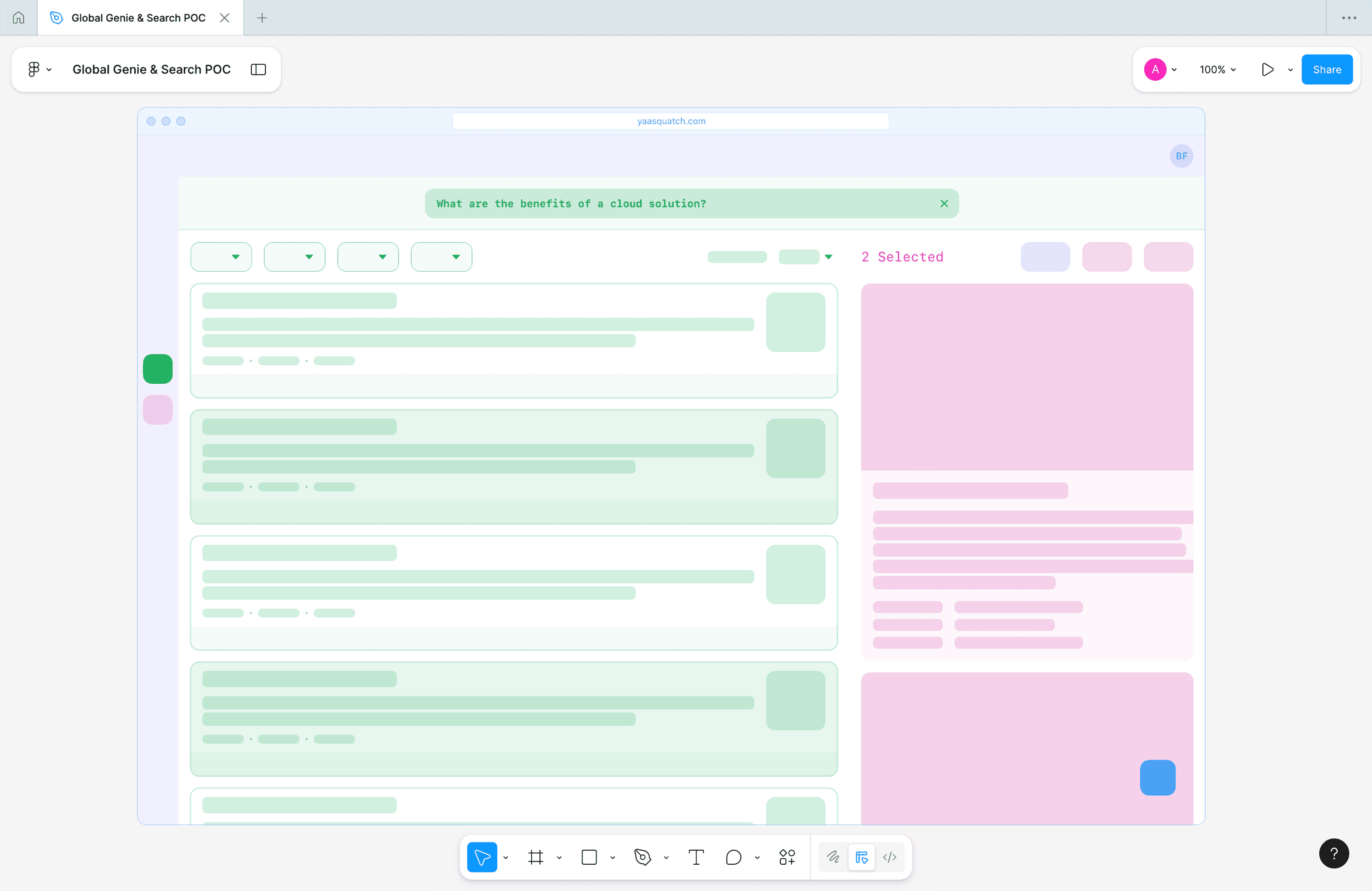

By 2023, AI had rapidly become table stakes in enterprise SaaS. Customers expected semantic understanding, instant answers, and intelligent assistance, but only when the results were accurate, explainable, and trustworthy.

At Bigtincan, AI sat at a critical intersection:

- Content discovery
- Sales productivity
- Platform credibility

When it worked, it accelerated adoption.

When it didn’t, it eroded trust faster than almost any other feature.